On Wednesday night I had the privilege of speaking at BERG’s Tomorrow’s World, an evening of talks about the near future of software, culture, networks and things.

Here’s a longer, better referenced version of what I said then.

It’s my contention that the near future of science is all about honing the division of labour between professionals, amateurs and bots. (Please note that I’m not a scientist so this is speculation for BERG and friends, informed by conversations with scientists and explored through my own team’s digital science projects at the Royal Observatory.)

The first of those projects, Astronomy Photographer of the Year, is an annual competition and exhibition, which is powered by Flickr.

It’s extraordinary what amateur astronomy photographers can capture now with their own – albeit expensive – kit.

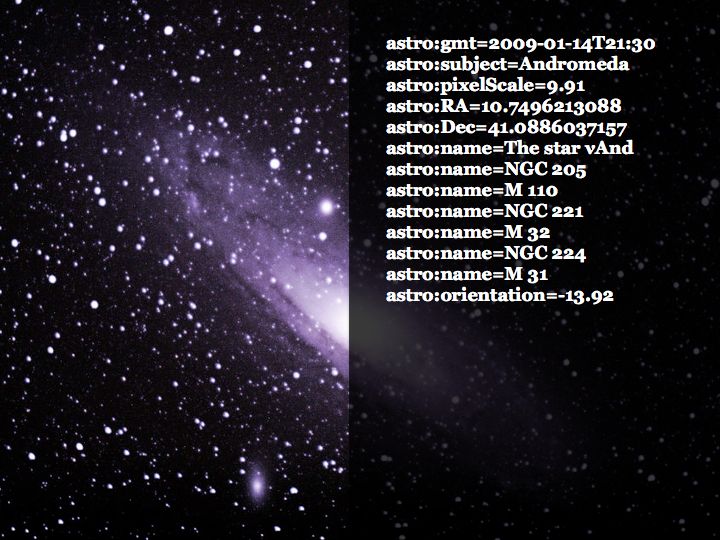

Selecting Flickr as our platform for the competition immediately got us to ask, what would be the space equivalent of geotagging? Astrotagging, obviously. If astrophotographers were to accurately describe what their photo depicts, and where in space that is, we could create a user-generated map of the night sky. But – as you might have already been thinking – working out where you are in space is much trickier than putting a pin on a map because there are the added dimensions of depth and movement. In addition to the space equivalents of longitude and latitude (RA and Dec), we required pixel scale and orientation.

Would anyone really go to the trouble of figuring out and tagging all of that information? Probably not. We were going to need a bot.

Fortunately, Flickr isn’t just ‘a great place to be a photo’; its API also allows you to develop bots that act autonomously for a user or a group. Early bots in use on Flickr include Hipbot and HAL. Hipbot, for example, automates some of the moderation tasks in the well-defined squared circle Group, automatically removing photos that are not square, or are too small.

We worked with the scientists at Astrometry.net to introduce a custom robot to the competition’s Flickr group. It adds astrotags to all of the pictures in the group, using the geometry of the stars.

How does it work?

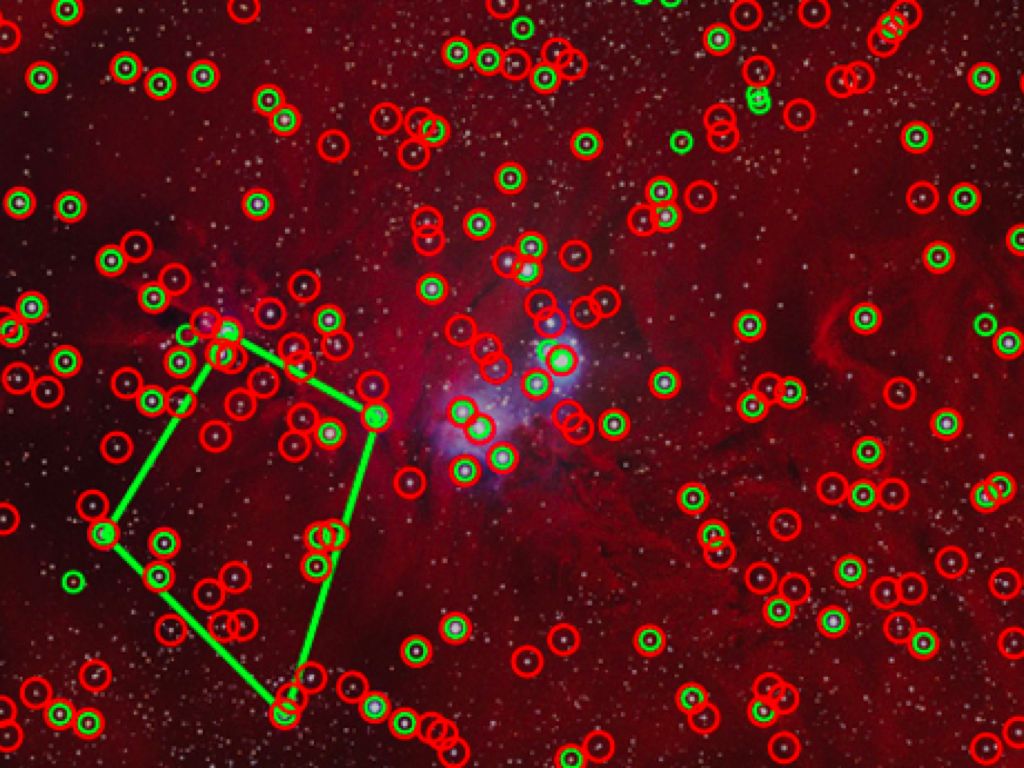

The robot starts with a large catalogue of star positions. For each picture it runs some image processing steps to find stars (the green circles). Next, it starts looking for sets of four stars in a photo (the green lines). For each set of four stars, it checks for a match in the ‘skymarks’ index. When the robot finds a skymark that seems to match, it does some cross-checking: ‘where else would I expect to see stars in this image?’ (the red circles). Lots of red and green circles overlap in this image, so the Astrometry bot must have found a match.

The robot can then identify objects and tag the photo.
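To make the four-star matching step a little more concrete, here is a minimal sketch in Python. It is my own illustration rather than Astrometry.net's code: the function names `quad_code` and `find_match`, the flat pixel coordinates, the dictionary index and the tolerance value are all assumptions. The idea is to describe each set of four stars by numbers that stay the same however the photo is shifted, rotated or scaled, so the description can be looked up in an index.

```python
from itertools import combinations


def quad_code(stars):
    """Describe four (x, y) star positions in a way that ignores
    translation, rotation and scale.

    The two stars that are farthest apart define a frame in which one
    sits at (0, 0) and the other at (1, 1); the positions of the other
    two stars in that frame form the code.
    """
    pts = [complex(x, y) for x, y in stars]
    # Find the most widely separated pair of stars.
    i, j = max(combinations(range(4), 2),
               key=lambda pair: abs(pts[pair[0]] - pts[pair[1]]))
    a, b = pts[i], pts[j]
    # Express the remaining two stars in the frame defined by a and b.
    c, d = ((pts[k] - a) / (b - a) * complex(1, 1)
            for k in range(4) if k not in (i, j))
    # Remove the ambiguity of which end of the pair is the origin:
    # swapping a and b maps every point w to (1 + 1j) - w.
    if c.real + d.real > 1:
        c, d = complex(1, 1) - c, complex(1, 1) - d
    # Remove the ambiguity of which inner star is listed first.
    if c.real > d.real:
        c, d = d, c
    return (c.real, c.imag, d.real, d.imag)


def find_match(code, index, tolerance=0.01):
    """Return the name of the closest stored code within tolerance,
    or None. `index` maps a (hypothetical) sky-patch name to its code."""
    best_name, best_dist = None, tolerance
    for name, stored in index.items():
        dist = max(abs(p - q) for p, q in zip(code, stored))
        if dist <= best_dist:
            best_name, best_dist = name, dist
    return best_name
```

A real system works on the curved sky rather than a flat image, uses a far faster lookup than this linear scan, and follows any candidate match with the cross-checking step described above.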

Incidentally, as the robot works through more photos it’s building up a bigger and better index of skymarks.

The whole ‘astrotagging’ process is summarised really well in this short film that we commissioned from Mike Patterson and Jim LeFevre:

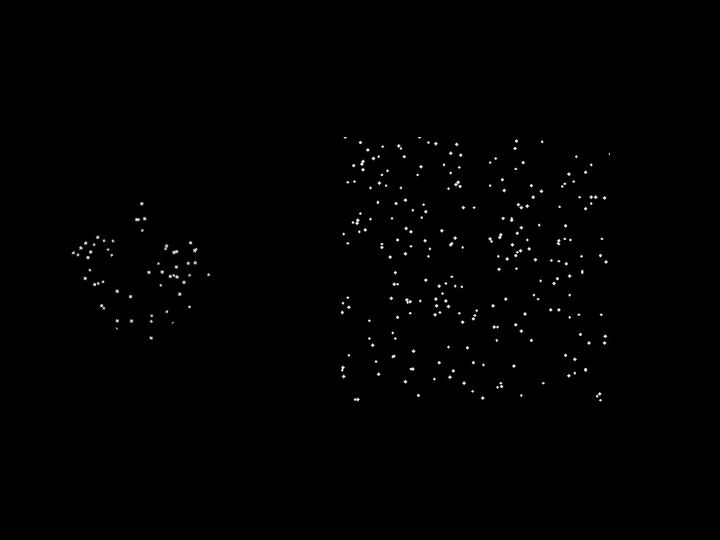

The Astrometry bot is an excellent demonstrator of what computers are particularly good at. This is a task that would take humans a long time, with a much greater chance of error. You try: find this ‘field’ (left) in this ‘sky’ (right).

But, clever as it is, there are some things the bot can’t do – like pick up moving things such as planets and comets. For example, the Astrometry bot doesn’t see Comet Holmes in this photo, even though it’s the main subject and clearly remarkable to the human eye!

So, for our astrotagging project we eventually arrived at a human-bot collaboration. The bot does the tricky bit and humans pick up the things the bot can’t do – like labelling moving things.

This idea of the remarkable power of the human eye leads to our next project: citizen science.

Citizen science projects use the time, abilities and energies of a distributed community to analyse data that is too vast to be addressed by academic capacity and that can’t be interpreted by computers either. The simplest example of ‘citizen science’ in action is reCAPTCHA. Cumulatively, we spend 150,000 hours every day completing CAPTCHAs to convince computers that we’re human! reCAPTCHA puts this to positive use, transcribing books.

Here’s a scan of a book…

This is what happens when it’s run through an Optical Character Recognition (OCR) system.

Hmmm. That doesn’t look like a great read. And here’s what happens when you get a single person to do it.

Through a sophisticated combination of multiple OCR programs, probabilistic language models, and multiple transcriptions by people, reCAPTCHA is able to achieve over 99.5% accuracy.
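To give a feel for how several imperfect readings can add up to one reliable answer, here is a minimal sketch of weighted voting. It is not reCAPTCHA's actual code: the function name `consensus`, the weights (half a vote for an OCR program, a full vote for a person) and the 2.5-vote threshold are illustrative assumptions, and the probabilistic language models are left out entirely.

```python
from collections import defaultdict


def consensus(guesses, threshold=2.5):
    """Combine weighted guesses for one scanned word.

    `guesses` is a list of (text, weight) pairs. Returns the winning
    text once it has gathered at least `threshold` votes, else None
    (meaning the word should be shown to more people).
    """
    totals = defaultdict(float)
    for text, weight in guesses:
        # Normalise so that 'Morning ' and 'morning' count as the same
        # answer (a simplification: a real transcription keeps case).
        totals[text.strip().lower()] += weight
    if not totals:
        return None
    best = max(totals, key=totals.get)
    return best if totals[best] >= threshold else None
```

For example, `consensus([("morning", 0.5), ("moming", 0.5), ("morning", 1.0), ("Morning ", 1.0)])` settles on `"morning"`, while the first three guesses on their own would not yet reach the threshold.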

A more sophisticated – and voluntary – example is Galaxy Zoo, which was launched in 2007 by Chris Lintott and others. Galaxy Zoo invites members of the public to classify images of galaxies, answering one simple question: does a galaxy look spiral or elliptical?

The project delivered more than 100 million classifications of galaxies (a data-set that was considerably better than could have been produced by a small group of experts), producing more than 20 peer-reviewed science papers, and making discoveries that hit the news headlines.

A different collaboration of scientists, led by David Baker, produced a game called FoldIt in 2008. FoldIt invites members of the public to manipulate different parts of a protein to optimise its 3D structure. It’s less about classification and more of a design and puzzle-solving challenge.

And it’s this use of human capacity that is important. When I talk about citizen science I don’t include distributed computing. Popularised by the SETI@home project, distributed computing divides a problem up into many tasks, each of which is solved by one or more computers. In SETI@home, volunteers’ computers sift through radio telescope data in search of alien signals. Countless others followed, such as Folding@home and Climateprediction.net. But the people involved just download a screensaver to donate spare computer cycles.

Interestingly, FoldIt was actually inspired by distributed computing. The FoldIt scientists initially released a distributed computing program, Rosetta@home, but people running the screensaver were frustrated by its painfully slow progress, as it ran through thousands of variations. Humans, with their highly-evolved talent for spatial manipulation, could often see the solution intuitively. It’s the same kind of frustration you experience when watching a Roomba clean a room in a pseudo-random way, which is ultimately effective but appears dumb to people.

The Rosetta@home volunteers started saying, ‘I can see where it would fit better.’

And it turns out that top-ranked FoldIt players – with little or no biochemistry training – can fold proteins better than a computer can. FoldIt players even beat protein experts. A protein that had not been solved for 15 years was recently solved by FoldIt players in just a couple of weeks. This is thought to be the first example in which non-scientists have solved a long-standing scientific problem – and this is one that has implications for the design of better AIDS drugs!

The Museum’s first citizen science project, Solar Stormwatch, invites members of the public to spot explosions on the Sun and track them across space to Earth, using near-real-time video data from NASA’s twin STEREO spacecraft. Stormwatch volunteers mark any visible solar storms in the STEREO videos, and then trace the progress of a storm through composite images to calculate an accurate speed and direction. This feeds into a user-generated space weather forecast on Twitter. Our first alert was published in December last year and reported in the press, including – appropriately – The Sun.

The Museum’s second project, Old Weather, invites members of the public to help extract meteorological data from historic ships’ logs. This extracted data is used to reconstruct past weather and improve climate model projections.

All citizen science projects start with well-defined tasks that answer a real research question. In Galaxy Zoo, ‘is a galaxy spiral or elliptical?’ In Solar Stormwatch, ‘is that a solar storm and is it headed toward Earth?’ But when you expose data to a large number of people, you also open that data up to serendipitous discovery. As BERG’s Matt Jones often quotes, ‘serendipity is looking for a needle in a haystack and finding the farmer’s daughter.’ In Galaxy Zoo, there was Hanny’s Voorwerp: an unusual, ghostly blob of gas that appeared to float near a normal-looking spiral galaxy.

By following her own curiosity, Hanny van Arkel, a 25-year-old Dutch school teacher, had discovered a new cosmic object.

The power of human curiosity is illustrated again by the study of the ‘Green Peas’. The Peas were not part of Galaxy Zoo’s original brief or decision tree but the citizen scientists were intrigued by these objects, started collecting them, and launched a campaign to attract the scientists’ attention: ‘Give peas a chance’.

The Peas were eventually found to be a new class of astronomical object. It’s one thing to harness clicks. It’s another to truly harness people power: the unique ability of humans to ask, ‘What’s that weird thing over there that you didn’t ask me to look at?’

This was more explicitly invited in Galaxy Zoo 2, with the introduction of the ‘Is there anything odd?’ option.

We introduced a whole data-analysis game in Solar Stormwatch, ‘What’s that?’ And in Old Weather, where the focus is on transcribing weather data, there’s an additional field for ‘events’ – anything that volunteers find interesting.

A group of Old Weather volunteers is tracking the relationship between the ‘Number on Sick List’ in the logs and the ‘Spanish flu’ outbreak of 1918.

Given that’s what we’ve seen so far, what does the ‘near future’ look like?

WHAT’S NEXT FOR CITIZEN SCIENCE?

- Much more of it due to the imminent ‘data deluge’. The next generation of experiments, simulations, sensors and satellites is making every field of science data-rich.

- More citizen-initiated research as science data becomes open by default.

- Integration of machine learning. Citizen scientists handing off training data-sets and routine tasks to computers and then cross-checking the results.

- Citizen scientists controlling ‘big science’ instruments, mesh networks of sensors, and scheduling lab time.

As citizen science becomes a routine part of how science gets done, there will be more competition for volunteers. The collaboration that produced Galaxy Zoo, Solar Stormwatch and Old Weather (the ‘Citizen Science Alliance’) already has education researchers studying motivations, which, in Galaxy Zoo, range from an interest in the science, to awe and aesthetic attraction, and the thrill of discovery.

There is also an emerging ethics: every task needs to have a real scientific research purpose; professional researchers should enter into discussion with the volunteers; volunteers should be respected as collaborators and recognised as co-authors on academic papers; and the projects must never waste clicks.

The requirement ‘not to waste clicks’ actually provides some of the impetus for the integration of machine learning, which has already been piloted in Galaxy Zoo. Galaxy Zoo used ~10% of its classified galaxies to train an artificial neural network, which was then able to reproduce classifications for the rest of the objects to an accuracy of greater than 90%. Of course the neural network is not as good as the human eye in recognising unusual objects but it worked for the bulk of the galaxies.

The pilot also suggested that these training sets would be effective when used on the more distant (and therefore faint) galaxies that will be imaged in future surveys.
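The workflow in that pilot (learn from the slice that people have classified, then let the machine label the rest and check how often it agrees) can be sketched in a few lines. Everything here is an assumption made for illustration: the data are synthetic random numbers rather than real galaxy measurements, the two features are hypothetical, and a simple nearest-centroid rule stands in for the artificial neural network that Galaxy Zoo actually used.

```python
import random


def train(samples):
    """Learn one average feature vector (centroid) per label.
    `samples` is a list of (features, label) pairs."""
    by_label = {}
    for features, label in samples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(sum(column) / len(rows) for column in zip(*rows))
        for label, rows in by_label.items()
    }


def predict(model, features):
    """Return the label whose centroid is closest to `features`."""
    def squared_distance(centroid):
        return sum((f - c) ** 2 for f, c in zip(features, centroid))
    return min(model, key=lambda label: squared_distance(model[label]))


def demo(seed=0, n=1000):
    """Train on 10% of a synthetic data-set and report the fraction of
    the remaining 90% that the model labels the same way."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        label = rng.choice(["spiral", "elliptical"])
        centre = 0.0 if label == "spiral" else 4.0  # made-up feature values
        features = (rng.gauss(centre, 1.0), rng.gauss(centre, 1.0))
        data.append((features, label))
    cut = n // 10
    model = train(data[:cut])   # the human-classified training slice
    rest = data[cut:]           # everything the machine must label
    agree = sum(predict(model, f) == label for f, label in rest)
    return agree / len(rest)
```

The synthetic classes are deliberately easy to tell apart, so the agreement figure from `demo()` says nothing about real galaxies; the point is only the shape of the hand-off between people and machine.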

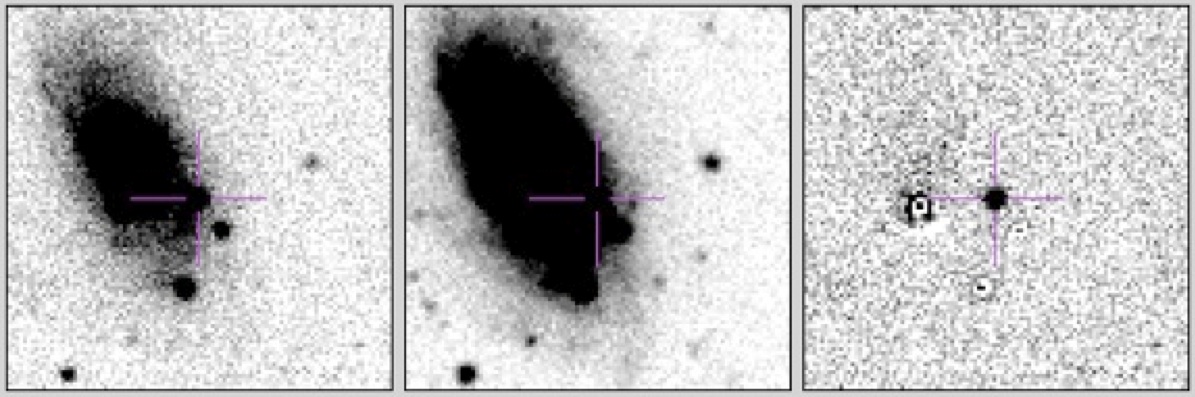

Citizen scientists will be (indirectly and directly) controlling the instruments of science. Here is Hanny’s Voorwerp again, this time deliberately imaged by the Hubble Space Telescope in response to Galaxy Zoo interest in the object.

This image was used to confirm the theory that Hanny’s object was a light echo from a quasar that burnt out over 100,000 years ago. Hanny directing the Hubble… that’s a genuine outbreak of the future.

Increasingly, citizen scientists’ classifications will be used to decide what data to keep and what objects to target or test. The Galaxy Zoo Supernovae project invites the public to evaluate candidate supernovae for further investigation by scientists stationed at telescopes around the world.

Volunteers are shown three images and follow a decision tree to filter out unlikely candidates.

Each candidate is classified by multiple people and given an average score, with the candidates ranked and made available for further investigation in real-time. Interesting candidates can be followed up on the same night as they’re discovered. Once again, the classifications have been shown to be very good. And once again, these human classifications can be used to improve the machine learning algorithm for automated classification.
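The averaging and ranking step can be sketched like this. It is a simplified illustration, not the project's code: the function name `rank_candidates`, the candidate identifiers and the numeric scores are assumptions, with each score standing for the outcome of one volunteer's trip through the decision tree.

```python
from collections import defaultdict
from statistics import mean


def rank_candidates(classifications):
    """Average the scores given to each candidate and rank them.

    `classifications` is a list of (candidate_id, score) pairs, one per
    volunteer classification. Returns (candidate_id, average_score)
    pairs, most promising first.
    """
    scores = defaultdict(list)
    for candidate_id, score in classifications:
        scores[candidate_id].append(score)
    averaged = {cid: mean(values) for cid, values in scores.items()}
    return sorted(averaged.items(), key=lambda item: item[1], reverse=True)
```

Because the ranking is recomputed from whatever classifications have arrived so far, a list like this can be refreshed as volunteers click, which is what makes same-night follow-up possible.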

The makers of FoldIt recently launched EteRNA where amateurs are invited to design RNAs (the tiny molecules at the heart of every cell), to create the first large-scale library of synthetic RNA designs in order to help scientists control disease-causing viruses.

If you win EteRNA’s weekly design competition, your RNA is synthesised in a lab and scored by how well it folds. People playing something that feels like a game are determining a lab’s testing programme.

So, the ‘near future’ is all about honing the division of labour between professionals, amateurs and bots. Already, the Citizen Science Alliance is analysing which contributors (or algorithms) consistently get classifications right, in order to weight responses, or assign more challenging tasks. It is also building a new software layer to manage workflow in real-time, which is particularly important for rapid response situations such as observing runs. But in all of this, it’s vital that we don’t engineer out the human factors like serendipity and reward.
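One simple way to weight responses by track record is sketched below. I don't know how the Citizen Science Alliance does this in practice, so treat every detail as an assumption: the function names, the idea of scoring contributors against a small set of expert-labelled items, the smoothing, and the default weight for newcomers.

```python
from collections import defaultdict


def contributor_weights(history, gold):
    """Estimate how reliable each contributor (human or algorithm) is.

    `history` is a list of (contributor, item, label) classifications;
    `gold` maps some items to an expert-agreed label. The weight is a
    smoothed fraction of gold items the contributor got right, so one
    lucky or unlucky answer doesn't produce a weight of exactly 1 or 0.
    """
    seen = defaultdict(int)
    right = defaultdict(int)
    for who, item, label in history:
        if item in gold:
            seen[who] += 1
            right[who] += int(label == gold[item])
    return {who: (right[who] + 1) / (seen[who] + 2) for who in seen}


def weighted_label(votes, weights, default_weight=0.5):
    """Pick the label with the greatest total weight behind it.
    `votes` is a list of (contributor, label) pairs for one item;
    contributors with no track record get `default_weight`."""
    totals = defaultdict(float)
    for who, label in votes:
        totals[label] += weights.get(who, default_weight)
    return max(totals, key=totals.get)
```

Note that a scheme like this only rewards agreement with the expected answer. It would need a separate route, such as the ‘Is there anything odd?’ option, to keep room for the serendipitous finds.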

(This post was heavily influenced by my collaborations and conversations with Natasha Waterson, Chris Lintott and Arfon Smith. Any mistakes are my own.)